By 2027, AI systems without role-based orchestration will be as outdated as monolithic web applications are today.

Right now, most teams building AI applications are focused on the wrong problem. They're chasing more powerful models, larger context windows, and better reasoning capabilities, assuming that if the core LLM gets smart enough, everything else will fall into place. But that's not how the most capable AI systems work. And it's not how intelligence, human or artificial, has ever worked.

The real breakthrough isn't coming from smarter models. It's coming from better orchestration.

The Monolithic Trap

When most engineers think about building an AI application, they imagine something like this: user sends a request → LLM processes it → system returns a response. One model, one pass, done. This works fine for simple tasks. But it breaks down the moment you need the system to retrieve and reason over fresh information, plan multi-step tasks that depend on real-world state, validate outputs before taking action, adapt when things go wrong mid-execution, or learn from past interactions.

Teams hit these limitations and their first instinct is: "We need a better model." So they wait for GPT-5, or Claude 4, or whatever comes next. That's the wrong instinct.

Intelligence Isn't a Single Thing

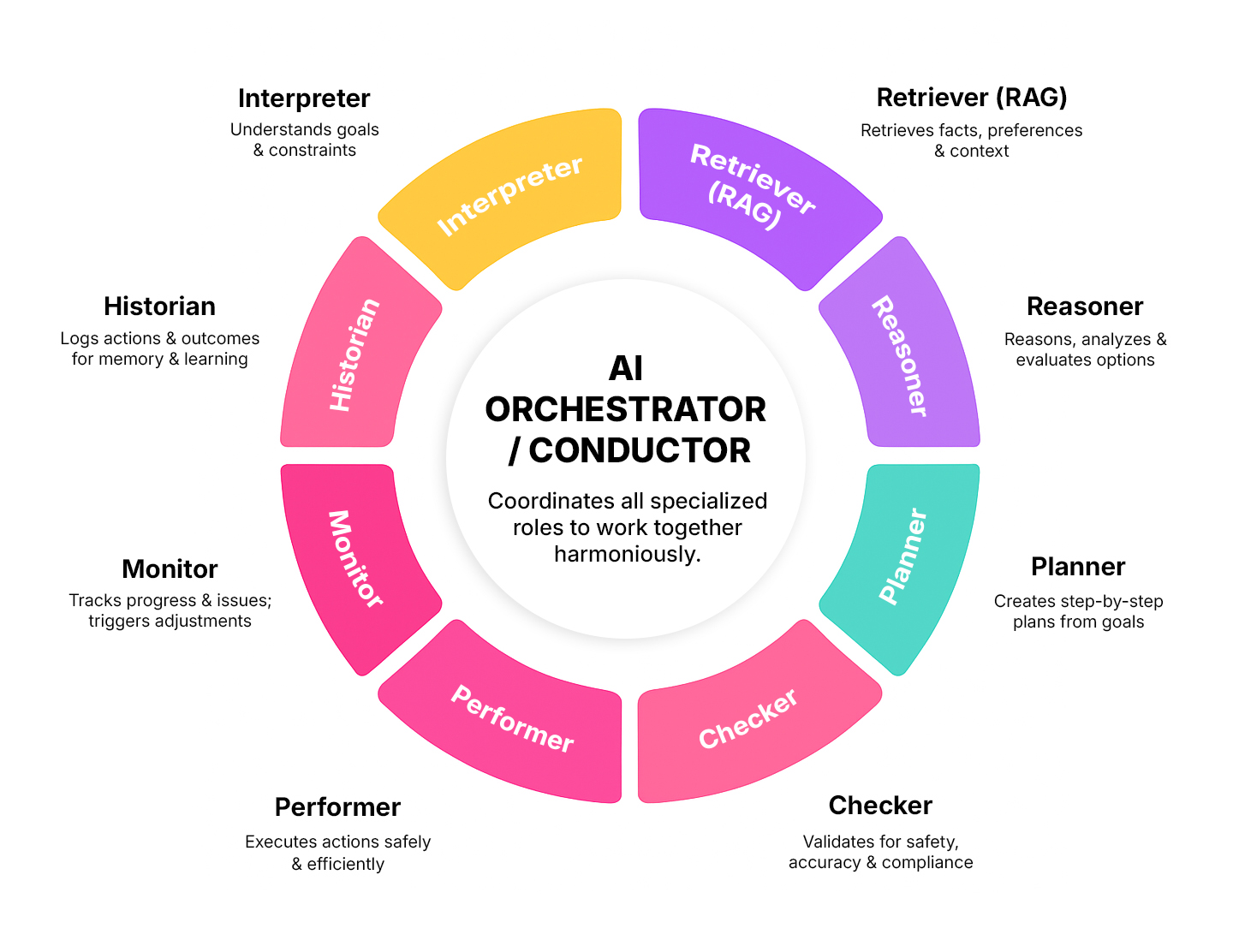

Here's what we've learned building production AI systems: intelligence doesn't come from one powerful component doing everything. It comes from multiple intelligent components, each specialized in its own domain, coordinating seamlessly.

Think about how your brain actually works when you book a flight online. Your memory acts like the Retriever, instantly recalling your preferred airlines, past trips, and traveler details. Your attention acts like the Interpreter, focusing on key constraints (price, timing, connections) and understanding what success looks like. Your accumulated experience acts like the Reasoner, drawing on past patterns to compare tradeoffs, evaluate which options typically work best, and weigh morning vs evening, direct vs layover, cost vs convenience. Your caution acts like the Checker, scanning for problems: tight connections, unreliable airlines, hidden fees. Your motor control acts like the Performer, executing the booking: filling forms, selecting seats, entering payment. Your awareness acts like the Monitor, adapting to changes: price updates, availability shifts, errors.

Even though you experience this as one smooth task, your brain is running multiple specialized systems in parallel, each contributing something the others can't.

Next-generation AI systems work the same way.

Instead of one monolithic model, these systems distribute intelligence across specialized roles, where each role has a distinct responsibility and contributes something essential to the overall intelligence of the system.

- The Interpreter understands what you actually want, parsing intent, identifying constraints, defining success criteria. It's the role that turns "book me a flight to New York next week" into structured requirements the rest of the system can work with.

- The Retriever (retrieval-augmented generation, or RAG) supplies the right information at the right time: past decisions, user preferences, up-to-date facts, relevant context. Without this role, the system can only rely on what was learned during training, which quickly becomes outdated.

- The Reasoner works through the problem, understanding tradeoffs, evaluating options, generating plans. This is the part that thinks and analyzes, but critically, it doesn't act on its own.

- The Researcher gathers possibilities, querying external systems, collecting data, exploring what's available. It goes out into the world to find options, bringing back raw data that other roles can filter and evaluate.

- The Planner sequences the work, breaking goals into steps, ordering dependencies, mapping the path forward. It takes a high-level objective and creates an actionable roadmap for achieving it.

- The Checker validates before action, ensuring safety, compliance, correctness, and alignment with constraints. This is the role that prevents costly mistakes by reviewing plans before they're executed.

- The Performer executes the plan, calling APIs, submitting forms, making changes in the real world. While other roles think and plan, the Performer is the one that actually makes things happen.

- The Monitor watches for problems, tracking progress, catching errors, triggering retries or escalations. It's constantly vigilant during execution, ready to adapt when reality doesn't match expectations.

- The Historian documents everything, creating audit trails, enabling learning, building institutional memory. This role ensures the system can explain what happened, learn from mistakes, and improve over time.
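To make the division of labor concrete, here is a minimal sketch of a few of these roles as plain functions that pass a shared state object along. Everything in it is an assumption made for illustration: the `TaskState` fields, the function names, and the stubbed flight data are hypothetical, and a real Interpreter or Researcher would call a model or an external service instead of returning fixed values. The roles run in a fixed order here only to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class TaskState:
    """Shared state handed from role to role (hypothetical structure)."""
    request: str
    requirements: dict = field(default_factory=dict)
    options: list = field(default_factory=list)
    plan: list = field(default_factory=list)
    approved: bool = False
    log: list = field(default_factory=list)


def interpreter(state):
    # Stub: turns the free-text request into structured requirements.
    state.requirements = {"destination": "New York", "max_price": 400}
    return state


def researcher(state):
    # Stub: stands in for querying an external flight search.
    state.options = [
        {"flight": "A1", "price": 350},
        {"flight": "B2", "price": 520},
    ]
    return state


def reasoner(state):
    # Keeps only the options that satisfy the interpreted constraints.
    budget = state.requirements["max_price"]
    state.options = [o for o in state.options if o["price"] <= budget]
    return state


def planner(state):
    # Turns the best remaining option into a concrete step.
    state.plan = [f"book {o['flight']}" for o in state.options[:1]]
    return state


def checker(state):
    # Approves only if there is something to execute.
    state.approved = bool(state.plan)
    return state


ROLES = [interpreter, researcher, reasoner, planner, checker]


def run(request):
    state = TaskState(request=request)
    for role in ROLES:
        state = role(state)
        state.log.append(role.__name__)  # Historian-style trail of who ran
    return state


result = run("book me a flight to New York next week")
print(result.plan, result.approved)
```

Each function touches only the part of the state it is responsible for, which is the property the role list describes: the Reasoner filters but never books, and the Checker approves but never plans.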

Think of it like an orchestra. No single musician carries the performance. The violins don't decide when the horns come in. The conductor doesn't play every instrument. But when they coordinate properly, the result is something no individual could create alone. The magic isn't in any one instrument. It's in the harmony between them.

Why This Matters Now

We're at an inflection point. The first wave of AI applications was about access, giving people a chat interface to a powerful model. That was table stakes. The next wave is about composition, systems that can handle complexity by distributing work across specialized roles that coordinate dynamically.

This isn't theoretical. We're seeing this pattern emerge in research assistants that can plan multi-day investigations; coding agents that can architect, implement, test, and deploy; customer service systems that can handle escalations, research the relevant history, and take corrective action; and financial systems that can analyze, validate, execute, and reconcile transactions. What makes these systems work isn't just a better LLM. It's the orchestration layer.

The Three Ways Teams Get This Wrong

1. The "Smarter Model Will Fix It" Fallacy

Teams hit a limitation and assume they need GPT-5. But the problem isn't the model's intelligence; it's that they're asking one component to do too many jobs. A Researcher shouldn't also be the Checker. A Planner shouldn't also execute. Separation of concerns matters in AI systems just like it does in software architecture.

2. The Rigid Pipeline

Teams build: Step 1 → Step 2 → Step 3, treating the AI system like traditional ETL. But real intelligence requires continuous feedback. Plans change mid-execution. New information arrives. Risks get reassessed. Systems that can't adapt will fail in production.
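One way to picture the difference from a fixed Step 1 → Step 2 → Step 3 chain is a loop in which a failed step is fed back into planning. The sketch below assumes a hypothetical `plan` function playing the Planner, a hypothetical `perform` function playing the Performer, and a hard-coded failure (the direct flight is sold out) so the replanning path is exercised. It is an illustration of the feedback idea, not a production retry policy.

```python
def plan(goal, failed):
    """Hypothetical Planner: propose the next step, skipping known failures."""
    candidates = ["book_direct_flight", "book_layover_flight"]
    return [c for c in candidates if c not in failed][:1]


def perform(step):
    """Hypothetical Performer: in this sketch the direct flight is sold out."""
    return step != "book_direct_flight"


def run_with_feedback(goal, max_attempts=3):
    failed = set()
    history = []
    for _ in range(max_attempts):
        steps = plan(goal, failed)
        if not steps:
            # Nothing left to try: hand the problem to a human.
            history.append("escalate")
            return False, history
        step = steps[0]
        ok = perform(step)
        history.append((step, ok))  # the Monitor observes every outcome
        if ok:
            return True, history
        failed.add(step)  # feed the failure back so the Planner adapts
    return False, history


done, history = run_with_feedback("fly to New York")
print(done, history)
```

The plan is recomputed on every pass using what has been learned so far, so a failure changes the next attempt instead of ending the run.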

3. The Context Overload

Teams dump everything into the LLM's context window and hope it figures it out. But that's like having one person in a meeting responsible for remembering everything, planning everything, and executing everything. It's inefficient and error-prone. Specialized roles with clear responsibilities scale better.
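A small sketch of the alternative: instead of handing every role the full context, each role receives only the slice it needs. The context keys, the role names used as dictionary keys, and the `context_for` helper are all hypothetical, chosen to match the flight-booking example used earlier.

```python
# Hypothetical full context accumulated during a booking task.
FULL_CONTEXT = {
    "request": "book me a flight to New York next week",
    "user_preferences": {"seat": "aisle"},
    "search_results": [{"flight": "A1", "price": 350}],
    "payment_details": {"card_on_file": True},
}

# Which keys each role is allowed to see (an assumption for this sketch).
ROLE_VIEWS = {
    "reasoner": {"request", "user_preferences", "search_results"},
    "performer": {"request", "payment_details"},
}


def context_for(role, context):
    """Return only the part of the context that the given role needs."""
    allowed = ROLE_VIEWS[role]
    return {key: value for key, value in context.items() if key in allowed}


reasoner_view = context_for("reasoner", FULL_CONTEXT)
performer_view = context_for("performer", FULL_CONTEXT)
print(sorted(reasoner_view), sorted(performer_view))
```

Scoping the context this way keeps each prompt smaller and also means the Reasoner never sees payment details it has no reason to handle.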

What This Means for Engineers

Your role is shifting. You're no longer writing every instruction. You're designing how the system thinks and collaborates. This means defining roles, not just functions: what is each component responsible for, what can it decide, what must it escalate? It means designing conversations, not just APIs: how do components communicate, when do they need to talk, what information must be shared?

It means establishing guardrails so the Checker and Monitor can keep the system safe. It means guiding reasoning so the Reasoner has the right context and boundaries. It means building adaptive workflows where plans evolve as new information arrives. You're moving from "how do I code this step?" to "how do I design a team of capable parts that can figure out the steps themselves?"
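Defining roles rather than functions can start with writing down, for each role, what it is responsible for, what it may decide alone, and what it must escalate. The following is a minimal sketch of such a specification. The `RoleSpec` class, its field names, and the example decisions are hypothetical, not taken from any particular framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleSpec:
    """Declarative description of one role (hypothetical structure)."""
    name: str
    responsibility: str
    can_decide: frozenset
    must_escalate: frozenset

    def route(self, decision):
        """Say whether this role decides, escalates, or rejects a decision."""
        if decision in self.can_decide:
            return "decide"
        if decision in self.must_escalate:
            return "escalate"
        return "reject"  # outside this role's remit entirely


checker = RoleSpec(
    name="Checker",
    responsibility="validate plans before they are executed",
    can_decide=frozenset({"approve_plan", "reject_plan"}),
    must_escalate=frozenset({"override_budget_limit"}),
)

print(checker.route("approve_plan"))
print(checker.route("override_budget_limit"))
print(checker.route("submit_payment"))
```

Keeping the specification as data makes the boundaries reviewable: anyone can read what the Checker is allowed to do without tracing through prompt text or control flow.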

The Composer: The Human in the Loop

Here's what's critical to understand: the AI orchestra doesn't compose itself. Just as a musical composer writes the score that musicians follow, a human Composer defines what roles exist and what they're responsible for, what information is required for each type of task, what constraints must always be respected, how roles should interact and when, and what the overall experience should feel like.

The AI doesn't invent its own architecture. It doesn't spontaneously decide how to interpret a request or what information is missing. You design the blueprints. The orchestra brings them to life. The Composer is the architect of intelligence. The orchestra executes the architecture.

What's Possible in 12 Months

When orchestration becomes standard practice, we'll see AI systems that actually complete complex tasks without constant human intervention, not because the LLM got smarter, but because specialized roles keep each other in check. We'll see explainable decisions where you can trace exactly which role made which choice and why, critical for regulated industries. We'll see adaptive systems that handle edge cases gracefully instead of failing catastrophically, because the Monitor catches problems and the Planner adjusts. We'll see collaborative AI that works alongside humans naturally, because the roles map to how humans already think about dividing work.
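Tracing which role made which choice and why depends on every role recording its decisions somewhere queryable. Below is a minimal sketch of that idea, assuming a hypothetical in-memory `Historian` class and `Decision` record. A real system would persist the trail, and the recorded reasons here are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    role: str
    choice: str
    reason: str


class Historian:
    """Hypothetical in-memory audit trail of role decisions."""

    def __init__(self):
        self._trail = []

    def record(self, role, choice, reason):
        self._trail.append(Decision(role, choice, reason))

    def explain(self, choice):
        """Return every recorded decision that concerns the given choice."""
        return [d for d in self._trail if d.choice == choice]


historian = Historian()
historian.record("Reasoner", "flight A1", "cheapest option within budget")
historian.record("Checker", "flight A1", "connection time above minimum")
historian.record("Planner", "pay by saved card", "user preference on file")

for decision in historian.explain("flight A1"):
    print(f"{decision.role}: {decision.reason}")
```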

The Bottom Line

Intelligence, human or artificial, has never been about one brilliant component doing everything. It's about specialized capabilities working in harmony. The teams that understand this will build AI systems that are more reliable, more adaptable, more explainable, and more capable than anything achievable with a single model, no matter how powerful.

AI is not one mind. It is many specialized minds working in sync.

The future belongs to those who know how to orchestrate them.